Autonomous Robotic Assistance: Intent-Driven Object Retrieval System

Capstone Project | MS Robotics Engineering | Worcester Polytechnic Institute

In collaboration with Brigitte Broszus, Keith Chester, & Bob DeMont

The LinkedIn link below has our final presentation & my technical report.

“I need my boots.”

My Technical Highlights

🤖 Autonomous Navigation & Mapping

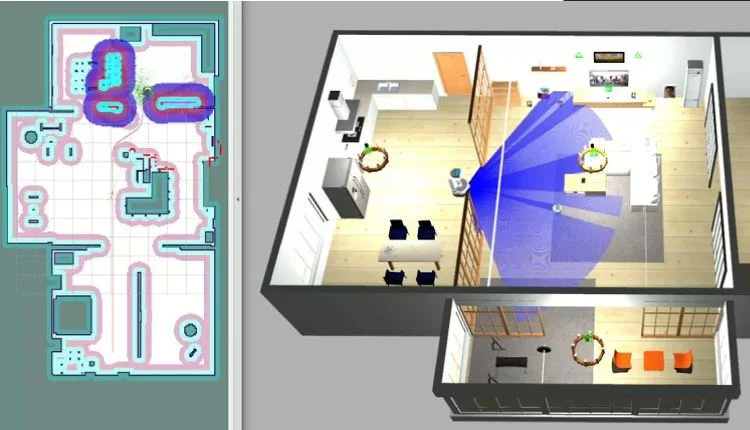

Implemented SLAM (Simultaneous Localization and Mapping) using the ROS2 Nav2 framework

Integrated sensor fusion of wheel odometry, IMU, and LIDAR data

Optimized real-time performance through selective sensor data fusion

🔄 System Integration

Designed modular ROS2 architecture for perception, planning, and control

👁️ Object Recognition System

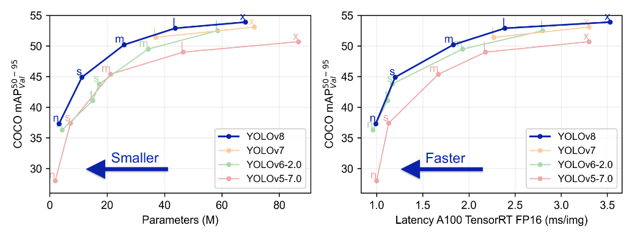

Deployed YOLOv8n for real-time object detection at 15Hz

Evaluated speed-accuracy trade-offs for real-world deployment

Abstract

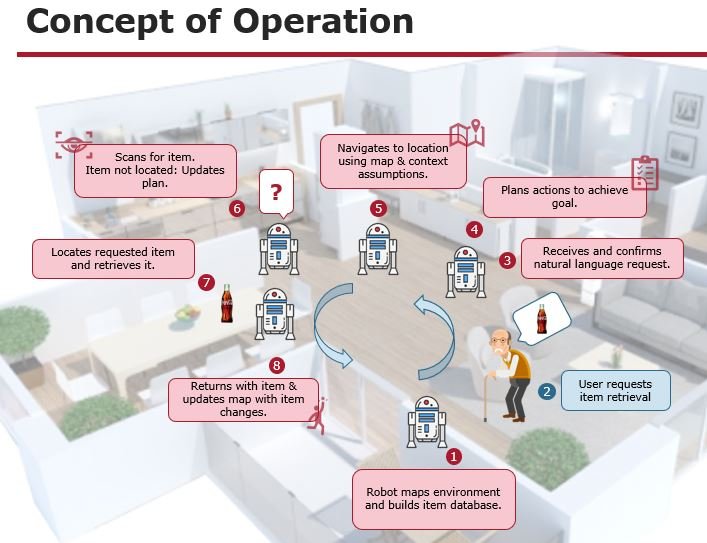

Developed an end-to-end autonomous robotic system that bridges the gap between natural language commands and physical object retrieval in unstructured home environments.

This project tackled three core robotics challenges:

robust state estimation in dynamic environments

real-time object recognition

efficient task planning

The system architecture integrates the ROS2 Navigation2 (Nav2) framework with a custom state estimation pipeline, fusing data from a SICK TIM571 LIDAR (15Hz), a 6-axis IMU (200Hz), and wheel encoders (100Hz) through an optimized Extended Kalman Filter (EKF). Key innovations include selective sensor fusion, which avoids fusing redundant angular velocity data while maintaining pose accuracy (±0.15m position error), and a modified local planner tuned for constrained indoor spaces.
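For readers less familiar with the EKF, the sketch below shows its predict/update cycle on a planar robot in plain Python. It is a simplified illustration, not the project's filter: it assumes wheel-odometry forward speed and IMU yaw rate enter as control inputs (yaw rate is taken from the IMU alone, in the spirit of the selective fusion described above) and that a LIDAR/SLAM pose arrives as a direct [x, y, theta] measurement. The class name, the three-state model, and all noise values are hypothetical; robot_localization's EKF, for comparison, estimates a 15-dimensional state.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def mat_mul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product (kept dependency-free for the sketch)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def wrap_angle(theta: float) -> float:
    """Wrap an angle into [-pi, pi)."""
    return (theta + math.pi) % (2.0 * math.pi) - math.pi


class PlanarEKF:
    """Hypothetical 3-state EKF: state [x, y, theta] in the odom frame."""

    def __init__(self, q_diag: Sequence[float], r_diag: Sequence[float]):
        self.x = [0.0, 0.0, 0.0]
        self.P = [[0.01 if i == j else 0.0 for j in range(3)] for i in range(3)]
        self.Q = [[q_diag[i] if i == j else 0.0 for j in range(3)] for i in range(3)]
        self.r = list(r_diag)  # variances of the x, y, theta pose measurements

    def predict(self, v: float, w: float, dt: float) -> None:
        """Propagate with forward speed v (wheel odometry) and yaw rate w (IMU)."""
        px, py, th = self.x
        self.x = [px + v * dt * math.cos(th),
                  py + v * dt * math.sin(th),
                  wrap_angle(th + w * dt)]
        # Jacobian of the unicycle motion model with respect to the state
        F = [[1.0, 0.0, -v * dt * math.sin(th)],
             [0.0, 1.0, v * dt * math.cos(th)],
             [0.0, 0.0, 1.0]]
        FPFt = mat_mul(mat_mul(F, self.P), transpose(F))
        self.P = [[FPFt[i][j] + self.Q[i][j] for j in range(3)] for i in range(3)]

    def update_pose(self, z: Sequence[float]) -> None:
        """Fuse a pose measurement [x, y, theta] as three sequential scalar updates."""
        for i in range(3):
            innovation = z[i] - self.x[i]
            if i == 2:
                innovation = wrap_angle(innovation)
            s = self.P[i][i] + self.r[i]                  # innovation variance
            k = [self.P[row][i] / s for row in range(3)]  # Kalman gain column
            self.x = [self.x[row] + k[row] * innovation for row in range(3)]
            self.x[2] = wrap_angle(self.x[2])
            p_row = list(self.P[i])                       # P <- P - k * (row i of P)
            self.P = [[self.P[r][c] - k[r] * p_row[c] for c in range(3)] for r in range(3)]


ekf = PlanarEKF(q_diag=[1e-4, 1e-4, 1e-4], r_diag=[0.01, 0.01, 0.01])
for _ in range(20):                  # 2 s of straight driving at 0.5 m/s
    ekf.predict(v=0.5, w=0.0, dt=0.1)
ekf.update_pose([1.1, 0.0, 0.0])     # hypothetical SLAM pose fix
print([round(value, 3) for value in ekf.x])
```

After 2 s of dead reckoning the estimate sits at x = 1.0 m; the pose fix at 1.1 m pulls it partway toward the measurement, weighted by the relative variances, and shrinks the x variance.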

The perception pipeline combines LIDAR-based SLAM with YOLOv8n object detection, achieving 15Hz processing rate while managing computational constraints. Custom ROS2 message types were developed to handle object pose estimation and state management, with optimized transform tree updates to maintain real-time performance.
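To make the role of the transform tree concrete: once the robot knows its pose in the map frame and perception reports an object relative to base_link, chaining the two transforms places the object in the map frame, where the planner can navigate to it. The planar Pose2D class below is a hypothetical, hand-rolled sketch (frame names follow the usual ROS conventions; the numbers are made up). In a ROS2 system these lookups would normally go through tf2 rather than being composed by hand.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    """Planar pose of a child frame expressed in its parent frame."""
    x: float
    y: float
    yaw: float

    def compose(self, child: "Pose2D") -> "Pose2D":
        """Chain parent->self with self->child to get parent->child."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        yaw = self.yaw + child.yaw
        return Pose2D(
            x=self.x + c * child.x - s * child.y,
            y=self.y + s * child.x + c * child.y,
            yaw=math.atan2(math.sin(yaw), math.cos(yaw)),  # normalize into [-pi, pi]
        )


# Hypothetical values: the robot's pose in the map (from localization) and a detected
# object's pose relative to base_link (from perception).
map_T_base = Pose2D(x=2.0, y=1.0, yaw=math.pi / 2)
base_T_object = Pose2D(x=1.5, y=0.0, yaw=0.0)
map_T_object = map_T_base.compose(base_T_object)
print(map_T_object)
```

With the robot facing along +y, an object 1.5 m straight ahead ends up at (2.0, 2.5) in the map.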

System validation in a simulated home environment demonstrated an 85% navigation success rate. The project provides a foundation for autonomous home assistance.

Technical Stack: ROS2, Nav2, YOLOv8, Python, Gazebo Simulation, Docker, Git

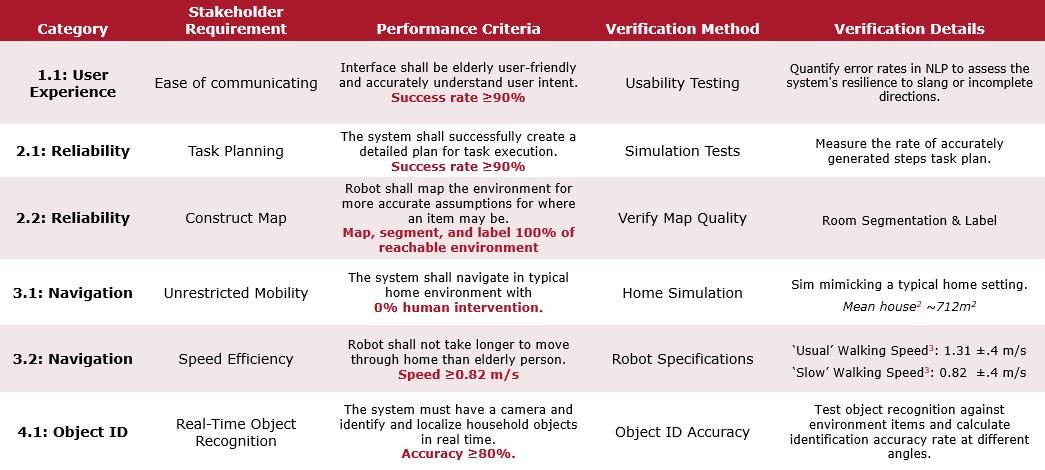

System & Performance Requirements

The system architecture follows fundamental software design principles of separation of concerns and modular design, decomposing the robotic system into distinct functional blocks (perception, planning, control) with well-defined interfaces. This modular approach enables independent development and testing of components, facilitates fault isolation, and allows for future system expansion while maintaining clear boundaries between high-level planning and low-level control. The architecture implements a message-passing paradigm through ROS2's pub/sub system, carefully designed to minimize latency in critical paths, manage bandwidth through appropriate message filtering, and ensure data consistency across distributed components operating at varying update rates (15Hz vision, 200Hz IMU, 100Hz encoders).
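One common way to keep streams at different rates consistent is to pair each slow message with the nearest fast one by timestamp. ROS2's message_filters package offers an ApproximateTimeSynchronizer for this; the stdlib-only function below is a hypothetical sketch of the underlying idea (its name and tolerance are assumptions), matching a 15Hz camera frame to a buffer of 200Hz IMU timestamps.

```python
import bisect
from typing import Optional, Sequence


def nearest_sample(stamps: Sequence[float], query: float, max_gap: float) -> Optional[int]:
    """Return the index of the stamp closest to `query`, or None if it is more than
    `max_gap` seconds away. `stamps` must be sorted in ascending order."""
    if not stamps:
        return None
    i = bisect.bisect_left(stamps, query)
    # The closest stamp is either just before or at/after the insertion point.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(stamps)]
    best = min(candidates, key=lambda j: abs(stamps[j] - query))
    return best if abs(stamps[best] - query) <= max_gap else None


imu_stamps = [k / 200.0 for k in range(200)]   # one second of 200Hz IMU timestamps
camera_stamp = 1.0 / 15.0                      # a 15Hz camera frame stamped at t = 1/15 s
print(nearest_sample(imu_stamps, camera_stamp, max_gap=0.0025))
```

Returning None when no sample is close enough lets a consumer drop the frame instead of silently fusing stale data.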

Robot Selection - Fetch

I selected the Fetch robot as our development platform because its differential drive base carries a SICK TIM571 LIDAR (15Hz, 270° FOV), a 6-axis IMU (200Hz), and high-precision wheel encoders (100Hz), providing comprehensive sensor coverage for state estimation and navigation tasks. The platform can reach velocities approaching human walking speed (>0.85 m/s) while keeping a footprint that fits through standard doorways (base diameter 0.56m), which made it well suited to indoor assistance scenarios; its 7-DOF arm also leaves room for future manipulation capabilities.

Sensor Integration Architecture

I implemented the sensor suite through a carefully planned URDF configuration, based on the actual Fetch robot product specifications, combining multiple sensing modalities for robust perception:

LIDAR CONFIGURATION

The SICK TIM571 LIDAR was configured for indoor navigation with:

15Hz update rate matching hardware specifications

360 samples across a ~140° FOV (-1.2 to 1.2 rad, i.e. 2.4 rad ≈ 137.5°), narrower than the sensor's full 270°

Range bounds of 0.12m to 5.0m for indoor environments

Gaussian noise model for realistic simulation
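The configuration values above determine how each beam maps to a point in the sensor frame. The sketch below converts one scan to Cartesian points, assuming (as in a sensor_msgs/LaserScan) that beam i lies at angle_min + i * angle_increment and that the first and last beams fall exactly on -1.2 and 1.2 rad; the function name is hypothetical. Under that assumption the angular resolution is 2.4 / 359 ≈ 0.0067 rad (about 0.38°).

```python
import math
from typing import List, Tuple

# Values from the LIDAR configuration listed above
ANGLE_MIN = -1.2   # rad
ANGLE_MAX = 1.2    # rad
SAMPLES = 360
RANGE_MIN = 0.12   # m
RANGE_MAX = 5.0    # m

# Assumes the first and last beams sit exactly on ANGLE_MIN and ANGLE_MAX
ANGLE_INCREMENT = (ANGLE_MAX - ANGLE_MIN) / (SAMPLES - 1)


def scan_to_points(ranges: List[float]) -> List[Tuple[float, float]]:
    """Convert one scan to (x, y) points in the sensor frame, dropping invalid returns."""
    points = []
    for i, r in enumerate(ranges):
        # The chained comparison is False for NaN and inf, so those are dropped too
        if not (RANGE_MIN <= r <= RANGE_MAX):
            continue
        angle = ANGLE_MIN + i * ANGLE_INCREMENT
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


print(f"FOV: {math.degrees(ANGLE_MAX - ANGLE_MIN):.1f} deg, "
      f"resolution: {math.degrees(ANGLE_INCREMENT):.3f} deg")
```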

IMU INTEGRATION

Implemented a high-frequency (100Hz) simulated IMU with:

Carefully tuned noise parameters for realistic behavior

Full 6-axis measurements (3-axis angular velocity and 3-axis linear acceleration)

Bias modeling for angular velocity and linear acceleration

Mounting on base_link so readings directly capture the robot base's motion
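The bias and noise modeling listed above can be pictured, per axis, as: reading = true value + bias + white Gaussian noise. The stdlib-only sketch below is loosely modeled on the mean, stddev, bias_mean, and bias_stddev noise parameters that Gazebo IMU sensors expose, but it is not Gazebo's implementation; the class name and default values are hypothetical, not the project's tuned parameters.

```python
import random
import statistics


class GyroAxisNoise:
    """One gyro axis: reading = true rate + constant bias + white Gaussian noise.

    Defaults are illustrative only (rad/s).
    """

    def __init__(self, noise_stddev: float = 2e-4, bias_mean: float = 7.5e-6,
                 bias_stddev: float = 8e-7, seed: int = 0):
        self.noise_stddev = noise_stddev
        self._rng = random.Random(seed)
        # Bias is drawn once per run and then held constant
        self.bias = self._rng.gauss(bias_mean, bias_stddev)

    def measure(self, true_rate: float) -> float:
        return true_rate + self.bias + self._rng.gauss(0.0, self.noise_stddev)


# With the robot stationary, averaging many readings recovers an estimate of the bias.
gyro = GyroAxisNoise(seed=42)
readings = [gyro.measure(0.0) for _ in range(20000)]
print(f"true bias {gyro.bias:.2e}, estimated {statistics.fmean(readings):.2e}")
```

Averaging readings while the robot is stationary, as in the last lines, is a common way to estimate gyro bias before a run.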

Nav2 Integration

The integration of Nav2 with the Fetch platform revealed critical challenges in state estimation, centered on sensor fusion in the EKF node. The initial configuration attempted to fuse both odometry and IMU data.

This configuration led to significant drift between the odom and map frames, even though the fused data sources were complementary. The core issue lay in the coordinate frame transformations: inconsistencies between the odometry-derived pose estimates and the IMU-based orientation corrections accumulated into growing errors in the robot's state estimate.
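The original EKF configuration is not reproduced here. As a hypothetical illustration of selective fusion, the sketch below builds parameters for robot_localization's ekf_node (assuming that is the EKF node in question) as a Python dict, the form in which they might be passed from a ROS2 launch file. Each *_config entry is robot_localization's 15-element boolean vector over [x, y, z, roll, pitch, yaw, vx, vy, vz, vroll, vpitch, vyaw, ax, ay, az]; the helper functions, topic names, and chosen fields are assumptions. Here yaw rate comes only from the IMU and linear velocity only from the wheel odometry, so no state variable is fed by two sources.

```python
from typing import Dict, List

# Order of the 15 booleans in robot_localization's <sensor>_config parameters
STATE_FIELDS = ["x", "y", "z", "roll", "pitch", "yaw",
                "vx", "vy", "vz", "vroll", "vpitch", "vyaw",
                "ax", "ay", "az"]


def fusion_mask(*fields: str) -> List[bool]:
    """Build a 15-element fusion vector with True for each named state field."""
    unknown = set(fields) - set(STATE_FIELDS)
    if unknown:
        raise ValueError(f"unknown state fields: {sorted(unknown)}")
    return [name in fields for name in STATE_FIELDS]


def doubly_fused(params: Dict[str, object]) -> List[str]:
    """List state fields that both odom0 and imu0 feed into the filter."""
    odom, imu = params["odom0_config"], params["imu0_config"]
    return [name for name, a, b in zip(STATE_FIELDS, odom, imu) if a and b]


# Hypothetical parameters for a planar robot; topic names are placeholders
ekf_params = {
    "frequency": 30.0,
    "two_d_mode": True,
    "map_frame": "map",
    "odom_frame": "odom",
    "base_link_frame": "base_link",
    "world_frame": "odom",                     # this EKF publishes odom -> base_link
    "odom0": "/odom",
    "odom0_config": fusion_mask("vx", "vy"),   # wheels: linear velocity only
    "imu0": "/imu/data",
    "imu0_config": fusion_mask("vyaw"),        # IMU: yaw rate only
}

print(doubly_fused(ekf_params))
```

In a ROS2 Python launch file, a dict like this would be handed to the node through the parameters argument of launch_ros.actions.Node. A check such as doubly_fused makes redundant angular-velocity inputs easy to spot before tuning covariances.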

To maintain project momentum, development shifted to a TurtleBot3 platform that I had already configured with validated Nav2 parameters.

Computer Vision Integration

The perception system leverages YOLOv8n (nano), the lightweight variant of YOLOv8, chosen specifically for real-time robotics applications.

Key architectural advantages of YOLOv8n for our robotics application:

Anchor-free detection reducing computational overhead

15Hz processing rate achieved on modest compute hardware

The selection of YOLOv8n over larger variants represented a deliberate balance between detection accuracy (37.3 mAP50-95 on COCO val2017) and the real-time performance requirements of robotic object retrieval. The lightweight architecture proved well suited to our resource-constrained robotic platform.

While the YOLOv8n architecture initially appeared promising for our robotic perception needs, simulation testing revealed significant limitations:

Key Implementation Challenges:

Poor detection performance on simulated household objects due to the domain gap between COCO dataset training and Gazebo's rendered environments

Object class mismatches between YOLOv8n's pre-trained categories and our specific use case

This experience highlighted a critical lesson in robotics perception: while deep learning models like YOLOv8n excel on real-world data, their performance can degrade significantly on simulated imagery or when they have not been fine-tuned on application-specific data.
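The class-mismatch problem can be made explicit in the retrieval logic: a request can only be served if it maps to a class the detector was trained on. The sketch below is hypothetical (the Detection type, the request-to-class mapping, and the confidence threshold are assumptions, though the class names used are COCO labels). It keeps the most confident matching detection and raises an error for items, such as the boots in our motivating request, that the example mapping does not cover.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Sequence, Tuple


@dataclass(frozen=True)
class Detection:
    label: str
    confidence: float
    box: Tuple[float, float, float, float]  # x1, y1, x2, y2 in pixels


# Hypothetical mapping from spoken item names to detector class names (COCO labels)
REQUEST_TO_CLASSES: Dict[str, List[str]] = {
    "water bottle": ["bottle"],
    "mug": ["cup"],
    "bag": ["backpack", "handbag", "suitcase"],
}


def find_target(request: str, detections: Sequence[Detection],
                min_confidence: float = 0.5) -> Optional[Detection]:
    """Return the most confident detection matching the request, or None if not seen."""
    classes = REQUEST_TO_CLASSES.get(request)
    if classes is None:
        # No pretrained class covers this item; it would need custom training data
        raise KeyError(f"no detector class for {request!r}")
    matches = [d for d in detections
               if d.label in classes and d.confidence >= min_confidence]
    return max(matches, key=lambda d: d.confidence, default=None)


frame = [Detection("cup", 0.82, (40, 60, 90, 120)),
         Detection("cup", 0.31, (300, 50, 340, 95)),
         Detection("bottle", 0.67, (200, 80, 230, 160))]
print(find_target("mug", frame))
```

Separating "not in the label set" (an error) from "not currently visible" (None) lets a task planner decide whether to keep searching or report that the item is unsupported.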

Technical Learnings

Critical Insights:

State Estimation:

EKF parameter tuning significantly impacts navigation reliability

Sensor synchronization is crucial for accurate pose estimation

Perception:

YOLOv8n requires environment-specific training for robust performance

Depth integration improves object localization accuracy
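Depth integration is what turns a 2D detection into a 3D position. A minimal sketch under a pinhole camera model, assuming a depth image aligned with the color image and no lens distortion, back-projects the box centre with X = (u - cx) * Z / fx and Y = (v - cy) * Z / fy. The function name and intrinsics are hypothetical; in practice, taking the median depth inside the box is usually more robust than a single centre pixel.

```python
from typing import Tuple


def backproject(u: float, v: float, depth: float,
                fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """Pinhole back-projection of pixel (u, v) at metric depth into the camera optical
    frame (x right, y down, z forward). Lens distortion is ignored."""
    if depth <= 0.0:
        raise ValueError("depth must be positive")
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return x, y, depth


# Hypothetical intrinsics and a detection box centred at pixel (400, 240), 2 m away
point = backproject(400.0, 240.0, 2.0, fx=525.0, fy=525.0, cx=320.0, cy=240.0)
print(point)
```

The resulting camera-frame point can then be transformed into the map frame through the transform tree before being handed to the planner.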

Proposed Future Development:

Proposed Technical Improvements:

Implement an Unscented Kalman Filter (UKF) for better nonlinear state estimation

Develop custom object detection training pipeline

System Enhancements:

Integrate manipulation planning

Develop comprehensive system tests
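As background for the proposed UKF: its core is the unscented transform. Instead of linearizing with a Jacobian as the EKF does, it pushes a few deterministically chosen sigma points through the nonlinear function and re-weights them. The scalar sketch below uses the standard scaled sigma-point weights; the function name and defaults (alpha = 1, beta = 2, and kappa = 3 - n, a common heuristic for Gaussian inputs) are illustrative choices, not design decisions from the project.

```python
import math
from typing import Callable, Tuple


def unscented_transform_1d(mean: float, var: float, f: Callable[[float], float],
                           alpha: float = 1.0, beta: float = 2.0,
                           kappa: float = 2.0) -> Tuple[float, float]:
    """Propagate a scalar Gaussian N(mean, var) through f with 2n + 1 = 3 sigma points."""
    n = 1
    lam = alpha ** 2 * (n + kappa) - n
    spread = math.sqrt((n + lam) * var)
    sigma_points = [mean, mean + spread, mean - spread]
    # Weights for the mean (wm) and covariance (wc); wm sums to 1
    wm = [lam / (n + lam)] + [1.0 / (2.0 * (n + lam))] * 2
    wc = [wm[0] + (1.0 - alpha ** 2 + beta)] + wm[1:]
    ys = [f(p) for p in sigma_points]
    y_mean = sum(w * y for w, y in zip(wm, ys))
    y_var = sum(w * (y - y_mean) ** 2 for w, y in zip(wc, ys))
    return y_mean, y_var


mean_sq, _ = unscented_transform_1d(1.0, 0.5, lambda x: x * x)
print(mean_sq)
```

For x ~ N(1, 0.5) pushed through f(x) = x², the transform returns a mean of 1.5 (up to floating-point rounding), matching the exact value μ² + σ². A first-order, EKF-style propagation would instead predict f(1) = 1.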